Cyberpunk, Compounding Complexity, and Choice

The sky above the port was the color of television, tuned to a dead channel.

William Gibson - "Neuromancer" - July 1, 1984

Looking Back

I don't remember exactly when I read Neuromancer for the first time, but it was a very long time ago, probably in the early 90s. Like so many of us, I was drawn in by the story, and it sparked my imagination. Gibson's work can probably be credited in part for the career and life that I have today. Little did I know then how prescient his story would turn out to be...

A few weeks ago I was working with my favorite agentic AI assistant to create yet another application and had it connected to my browser. While it was working, I opened Google Gemini in a browser tab to ask a question; for the life of me I can't remember what. As I was typing away, Claude, being helpful, tried to examine the page. Something curious happened: Gemini "fought back". It wasn't actually Gemini the LLM (of course), but the page detected the browser automation and inspection and "stopped working". This went beyond the basic browser automation detection notifications I have begun to see more frequently. The experience of AI "fighting back" reminded me:

William Gibson's Sprawl Trilogy, Neuromancer (1984), Count Zero (1986), and Mona Lisa Overdrive (1988), along with his subsequent works, gave birth to Cyberpunk, a genre that has since become synonymous with the future of technology and society. At the core of the trilogy is the emergence, evolution, and transcendence of Artificial Intelligence (AI), told through Wintermute and Neuromancer, their impact on humanity, and their manipulation of it to reach their goals. The stories introduced virtual reality, "the internet", hacking, and the blurring of the lines between human and machine. Huge corporations were all-powerful, and made even more so by their AI systems. Intrusion Countermeasures Electronics (ICE) were the defense mechanisms that protected corporations, Artificial Intelligences, and their networks from hackers both human and machine. ICE wasn't just a software firewall; it was an active, adaptive, and intelligent agent that defended against intrusion and attacks. Sometimes with fatal results.

The LLMs behind Google Gemini, OpenAI ChatGPT, Anthropic Claude, and others are not (yet) using AI-powered ICE to actively prevent browser automation and analysis (as far as I can tell). Still, the experience of being blocked from probing an AI chatbot while using an AI agent was one of the many moments over the past 35+ years that have reminded me just how much Gibson got right way back in 1984.

The Past 3 Years

On November 30th, 2022, OpenAI released ChatGPT as an AI chatbot application to the (largely) unsuspecting world. I've been working with technology for a living for more than 30 years, and with data and AI for the past decade. I got my first paycheck in this field in the fertile and transformative days of the early internet. I had no idea what was coming as 2022 came to an end... In March of 2023 I pivoted hard and dove into "Generative AI" with a small handful of peers, and we lived and breathed GenAI all day and night, every day. We were the point of the spear, and we helped to create successful products in market powered by this new technology before the end of 2023. We continue to do so today, albeit with an entire company alongside us. I couldn't have imagined the speed of change and the number of certainties that I've had to let go of in the last 36 months...

There is hype and anti-hype in the world of AI, and it is important to remember that what we call AI today is fluid and ever-changing. There are people trying to sell you something, and they are frequently telling a story with an ulterior motive. That being said, the technology that they create and continue to evolve is not going to go away, and it keeps getting better: by some measures, such as the length of tasks an agent can complete on its own, capabilities are doubling about every 7 months. We can do amazing things with what we already have, using both frontier and open source models. The cat is out of the proverbial bag.

I personally don't believe that LLMs are what we will think of as "AI" going forward. I'm not prophesying Artificial General Intelligence or Artificial Super Intelligence, and I don't believe LLMs on their own will ever hit those thresholds. LLMs are already becoming a part of a system of technologies and techniques that will deliver on many of the promises being made by Sam Altman, Dario Amodei, Sundar Pichai, and so many others. We are combining LLMs with "agents", "skills", "tools", "memory", and "knowledge" to create systems that continue to evolve and improve, most likely at a relentless pace, fueled by the flywheel they create.

Will you write this for me?

Today we can and do build software using AI agents with far more specificity and precision than most human programmers are capable of, and far, far, far faster than any human is able to. Specification Driven Development (SDD) emerged in mid 2025, spurred into existence as a response to (or evolution of) the "vibe coding" movement first named in February of 2025 by Andrej Karpathy. We went from "fancy autocomplete", to "let me chat with you to sketch something amazing" that I would never want to support in production, to "let me collaborate with an agentic AI assistant" to specify a solution so accurately and precisely that the generated solution is far better tested, designed, and rationalized than any codebase I've seen or written in all of my years. All of that happened in less than a year (8 months if you're keeping track).

Steve Yegge, whose "Blog Rants" were foundational to my early career, tells the story of our rapid progress over 9 months, from mid 2024 to early 2025, through the perspective of a "junior developer" and their more senior peers. He goes from fear for the junior developer to seeing the power they now have at their fingertips to focus on the actual problems they have to solve:

- The death of the junior developer - June 24, 2024

- Death of the stubborn developer - Dec 10, 2024

- Revenge of the junior developer - March 22, 2025

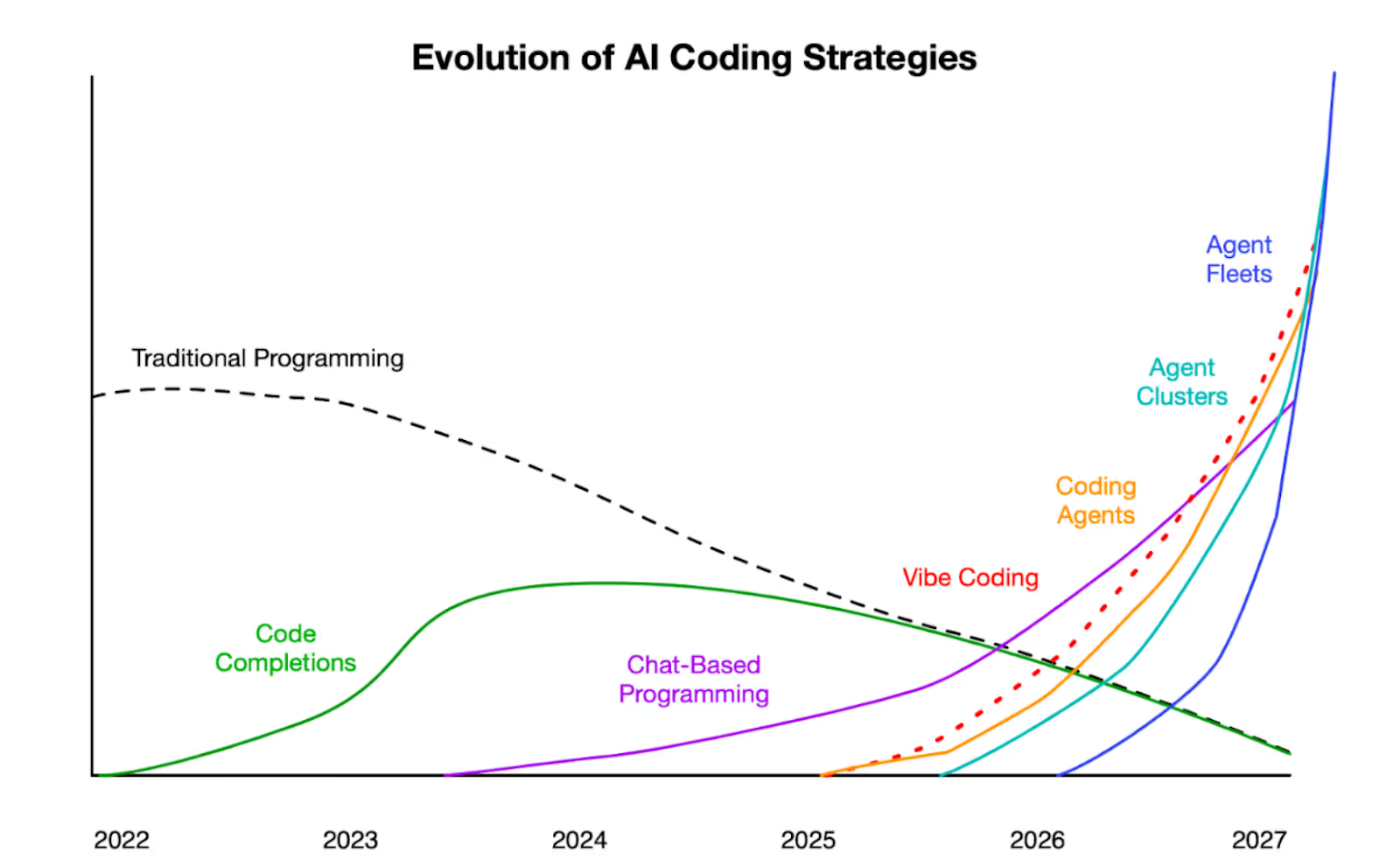

In his most recent piece, "Revenge of the junior developer", Stevey presents the following timeline: "Coding Agents" will supersede "Traditional Programming" (which he predicts will decline) by (what looks like) the 1st quarter of 2026 (today is October 15, 2025), "Agent Clusters" shortly thereafter, before Q2 2026 is over, and "Agent Fleets" by mid 2026, with the essential death of traditional programming by the time we hit 2027. It isn't clear what Steve means by Clusters or Fleets, but I routinely execute research, design, and creative tasks with 5-10 sub-agent workflows in parallel, using skills and tools to accomplish work faster than I could ever have imagined, with higher quality and fidelity. My workflows validate themselves. I still have to keep a close eye on them and provide guidance and correction, but they keep getting better and better. The emergence of "skills" has helped to spur even higher quality and more repeatable workflows that reflect on how they did and improve their skills as they iterate. I'm told that I'm in the "innovator" part of the technology adoption lifecycle, but I promise you none of this is hard to do. Like so much, it takes "letting go" and being open to change, because change is happening regardless of what you want. The good news and the bad news is that things are changing so fast that being an early adopter or innovator is easy.

The "code" doesn't matter anymore...

In September and October of 2025, Anthropic launched a very interesting pilot for a product they called "Imagine with Claude", demonstrating clearly the scope and impact of "Agentic UI". The core idea of Imagine wasn't new, but the implementation and scale certainly were. We've been able to use AI to generate user interfaces at various levels of complexity from natural language prompts or specifications for more than a year now, but the approachability of the "Imagine" experiment is hard to explain. In 10 minutes my wife, who is "not a programmer" (her words), was able to create a software suite for her coaching business that could manage clients (CRM) and their engagements, invoicing, and scheduling, (but wait, there's more) AND do training and curriculum development and management. In 10 minutes! That is traditional SaaS providers bypassed, and 10s or 100s of dollars a month in subscription fees taken back, in 10 minutes, with infinite, self-driven customization going forward.

Sounds too good to be true, right? What's the catch? Anthropic chose to put this experiment out without "storage" or "api" integration, and if you close your browser it is all gone. Before you say "BOOOOOO!!!!!!!", take a breath! Anthropic could have included those, but they chose not to. "Imagine" uses MCP (Model Context Protocol) to interact with the browser and the server; they just chose not to include persistence or interaction with MCP servers outside of their experimental platform. Yet. And by the way, Anthropic isn't the only foundation model provider, hyperscaler, SaaS company, "Low-Code" platform, or open-source project doing this.

Before you point out that Anthropic and others are going to charge my wife just as much as traditional SaaS providers (and yes, Anthropic currently does with its Pro licensing), consider this: it took me about 12 hours of working with "Coding Agents" to create the same functionality as "Imagine" and then quickly move beyond it by calling other MCP tools for persistence and other capabilities, saving applications, and more. The power of the Claude models is amazing, but Anthropic isn't the only provider in town. It is going to be a race to the bottom on these capabilities, and token pricing for a given level of capability (measured by MMLU score) is already falling about 10x a year. With emerging spec-driven frameworks like GitHub Spec-Kit, Kiro, and the growing ecosystem of tools being released daily, specifications written in natural language can be implemented by any compatible coding agent using any LLM provider. We will soon see projects where only the specifications are published, with the spec itself becoming the portable source of truth and the generated code optional or even unnecessary. I expect that we'll even see "Slack me the spec" begin to be a real thing in 2026.

Complexity

There are many who claim that AI and Agents will never be able to successfully model, create, and maintain software because "software is too hard". Here is my take: complexity in software development is a choice, rooted in the assumption that writing software is hard and in the need humans have to fiddle with and "improve" things that they didn't create or don't like anymore. We are taught to abstract and driven to continually re-invent "new" and "better" ways to do things. This has led to a continuous evolution of software that has been transformational over the past 181 years. I firmly believe it has gone more than one iteration too far.[1]

How many ways do we need to implement web form validation and how many times have you implemented a better way? When was the last time you or your team "did research and a spike" to "evaluate and select" a new library (or framework) that "does it better"? Did it?[2]

Which UI framework should you choose for your next project? What do you need to know to upgrade to React 15, 16, 17, 18, 19, ...? I'll wait.[3]

When was the last time you built a new framework, or built a solution that was completely extensible with interfaces and abstract classes and ...? I once had a candidate submit a coding example for a simple application that should have been 20 lines of code. It arrived as more than 20 files of separate interfaces, abstract classes, dependency injection, ... (YAGNI anyone?)

How do you run a Container on Amazon Web Services (AWS)? A Spark job..? What CIDR blocks and subnets do you need to use to set up your VPC so you can run your containers on AWS Elastic Container Service? How about the first section of each AWS product developer guide starting "Ensure you have the required IAM roles for ..."?

This isn't just AWS; complexity is rampant in all of the hyperscalers and in many other SaaS providers. Choice is almost always sold as a benefit, but we know that too many choices lead to decision fatigue. Newer platform providers like Cloudflare, Netlify, and Vercel, along with the leading AI (LLM) providers (OpenAI, Anthropic), are simplifying platforms with great success by reducing the solution space!

Human knowledge, skills, and personal preferences are the drivers of technology selection in almost every case I have seen in my 30+ years in this industry. I have been very guilty of "technology innovation" without a clear business problem. Until a handful of years ago I tried to atone as an architect, warning others so they could learn from my mistakes. I've learned the hard way that many technologists really don't want to hear it.[4]

There are some, but vanishingly few, places in the software world where the debate over which database to use, which programming language to choose, or which CSV parsing library to pick is actually worth innovating on. CSV libraries are a sore point for me, just like templating libraries. I once helped a team troubleshoot CSV parsing in their monolithic codebase and discovered two different libraries and one hand-written parser.

A New Hope?

With technology continuing to explode, and with the ability of these AIs to write code and create software in any language, framework, runtime, or CPU/GPU architecture, using any library and any pattern, that explosion could continue and accelerate. Agentic AI can write code "to spec" with far more test cases and coverage than you have ever imagined, and do it faster than you or I can grok (no, not that Grok). Yes, Virginia, you are going to spend the rest of your career trying to understand, map, debug, and fix the solution "the agents" created, but this time the only way to fix it is to explain it to them, ask them nicely to fix it, and plead with them to update the spec first!

There has to be a simpler way forward, a way to get to "Simple Made Easy", but for software built with AI.[5]

In June of 2024, as I was in the midst of an internal struggle about the drive for even more complexity in software with AI's "help" and the promise of "AGI" and "ASI" to come, a thought, or more of a question, popped into my head that changed my perspective on AI and software forever. In the time between then and now I have traced that question back to what I was lucky enough to experience through my career, curiosity, and opportunities. It came from a series of events and experiences that have connected to make me certain there is a better way to build software with AI's help.

The Question:

Ask yourself:

There is only one response to that question that I have heard in all of the times I've asked it over the past year and a half:

While "that is true", the response means that you are not "seeing it".

That is a topic for another day.

BLN

Notes

[1] The Second-System Effect - Fred Brooks warned us about this pattern in The Mythical Man-Month (1975): "The second system is the most dangerous system a person ever designs... The general tendency is to over-design the second system, using all the ideas and frills that were cautiously sidetracked on the first one." We've been iterating on web development patterns for 30+ years now, far beyond a "second system." Each new framework, library, and abstraction layer adds complexity while promising to reduce it. Brooks' observation about architects wanting to incorporate "all the things they didn't get to do the first time" applies perfectly to the endless churn of JavaScript frameworks and build tools.

[2] Form Validation: A Case Study in Unnecessary Complexity - Consider how many ways exist today to validate a simple web form:

- Native HTML5 validation (built into browsers since 2014)

- 30-50+ JavaScript validation libraries on GitHub (Yup, Joi, Zod, Vest, Vuelidate, Formik, React Hook Form, etc.)

- Framework-specific solutions (Vue Vuelidate, Angular Forms, Svelte forms)

- Backend validation libraries in every language (Express-validator, Django forms, Rails validations, etc.)

- Custom regex and validation functions written from scratch

HTML5 already provides required, pattern, min, max, minlength, maxlength, type="email", type="url", and the Constraint Validation API. For 90% of use cases, the platform already has a solution. Yet teams spend weeks evaluating, learning, and maintaining complex validation libraries that often break when dependencies update.
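To make the "no library needed" point concrete, here is a minimal sketch of vanilla validation written as a plain TypeScript function. The `FieldRules` shape and the `validateField` name are hypothetical and made up for this illustration; they only mirror a few of the HTML5 attributes listed above and are not the browser's Constraint Validation API. The email check is intentionally loose and is an assumption, not the rule browsers apply.

```typescript
// Hypothetical rule shape: the fields mirror a few HTML5 constraint attributes
// (required, minlength, maxlength, pattern, type="email"). Not a real library API.
interface FieldRules {
  required?: boolean;
  minLength?: number;
  maxLength?: number;
  pattern?: string; // like the HTML attribute, it must match the whole value
  type?: "text" | "email";
}

// Deliberately loose email check for illustration only (an assumption).
const SIMPLE_EMAIL = /^[^\s@]+@[^\s@]+$/;

// Returns a list of problems; an empty list means the value passed every rule.
function validateField(value: string, rules: FieldRules): string[] {
  const errors: string[] = [];

  if (value === "") {
    // As in the browser, an empty value can only fail the "required" constraint.
    if (rules.required) errors.push("required");
    return errors;
  }
  if (rules.minLength !== undefined && value.length < rules.minLength) {
    errors.push("too short");
  }
  if (rules.maxLength !== undefined && value.length > rules.maxLength) {
    errors.push("too long");
  }
  if (rules.pattern !== undefined) {
    // Anchor the pattern so it has to match the entire value.
    const anchored = new RegExp("^(?:" + rules.pattern + ")$");
    if (!anchored.test(value)) errors.push("pattern mismatch");
  }
  if (rules.type === "email" && !SIMPLE_EMAIL.test(value)) {
    errors.push("invalid email");
  }
  return errors;
}
```

Calling `validateField("ab", { required: true, minLength: 3 })` returns `["too short"]`, while an empty optional field returns an empty list. In a real page the same rules would usually live in the markup as attributes, with the browser doing this work for you.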

[3] The Framework Churn Problem: A Pattern We've Seen Before - We've lived through this cycle before. Remember Struts, Spring MVC, JSF, Tapestry, Wicket, and the dozens of other server-side frameworks that dominated the early 2000s? They were all perfectly functional. They solved real problems. Teams built successful businesses on them. And they're all essentially gone now, replaced by newer frameworks that... solve similar problems in different ways.

The JavaScript framework ecosystem is following the same pattern, but faster. The difference today is that the web platform itself has matured significantly:

- fetch API - native HTTP requests (no more jQuery.ajax or axios)

- ES Modules - native module system (no more RequireJS or Browserify)

- History API - client-side routing (pushState, replaceState)

- <dialog> element - native modals

- Web Components - native component encapsulation

- CSS Grid & Flexbox - native layout systems

- Promises & async/await - native async patterns

Many features that once required frameworks are now built into the platform. As LogRocket notes in "Can native web APIs replace custom components in 2025?", the platform has caught up to what frameworks pioneered.

The Critics:

- Alex Russell (Microsoft) - "The Performance Inequality Gap, 2024": Documents how JavaScript framework complexity has created a "performance inequality gap" where wealthy users with fast devices get great experiences while the global majority suffers.

- DHH (Ruby on Rails creator) - "You can't get faster than no build": Advocates for leveraging browser capabilities and avoiding complex build pipelines entirely.

- Allen Pike - "JavaScript Fatigue Strikes Back": Argues that despite a decade of progress, choosing JavaScript frameworks remains difficult. The rise of server-side rendering has created an "embarrassment of riches" rather than simplification.

- Chris Ferdinandi - Go Make Things: Advocates for vanilla JavaScript and progressive enhancement, arguing that the platform is now powerful enough for most use cases without framework overhead.

The Boring Technology Argument:

Dan McKinley's "Choose Boring Technology" makes a compelling case: "Let's say every company gets about three innovation tokens. You can spend these however you want, but the supply is fixed for a long while... If you choose to write your website in NodeJS, you just spent one of your innovation tokens. If you choose to use MongoDB, you just spent one of your innovation tokens."

In a human-centric development world, frameworks solved real problems: they provided structure, eliminated boilerplate, and made teams more productive. The innovation tokens were worth spending.

AI Changes Everything:

But AI agents excel at exactly the things frameworks were designed to eliminate: boilerplate code, repetitive patterns, and consistent structure. Anthropic's "Building Effective Agents" makes the same point about building with AI: start with simple prompts, iterate, and add complexity only when simpler solutions fall short.

The research continues: "Frameworks create extra layers of abstraction that can obscure the underlying prompts and responses, making them harder to debug and iterate on." And critically: "The most successful implementations... building with simple, composable patterns rather than immediately jumping to complex frameworks."

My take: Frameworks solved real problems in a human-centric development world. As AI agents become primary code generators, the trade-offs shift dramatically toward simpler, more transparent platforms where both humans and AI can reason about behavior. When your agent can generate perfect form validation in vanilla JavaScript just as easily as importing a library, the simpler solution wins. The platform has matured. The tools have changed. The innovation tokens are better spent elsewhere.

[4] The Architect Who Finally Learned - I spent years as that architect preaching YAGNI while secretly wishing I could build "the perfect abstraction." The turning point came when I had to maintain a "flexible, extensible" system I'd designed years earlier. Reading the code felt like archaeology: every abstraction had a story, but the stories were lost to time. Now I look for teams who can be pragmatic rather than clever. As Sandi Metz says: "prefer duplication over the wrong abstraction."

[5] Simple Made Easy - Rich Hickey's 2011 talk "Simple Made Easy" makes a crucial distinction: "Simple" means "one fold" (not intertwined), while "Easy" means "near at hand" (familiar). We often choose familiar complexity (easy) over unfamiliar simplicity (simple). A jQuery plugin might be "easier" (familiar, well-documented) than learning the platform API, but the platform API is "simpler" (fewer moving parts, one less dependency). Hickey argues we should optimize for simplicity because simple systems are more reliable, more maintainable, and easier to reason about over time. This distinction becomes critical as AI agents generate code: they can make complex systems "easy" by handling all the cognitive load, but that doesn't make the systems "simple." The question becomes: if AI removes the cost of complexity for humans, does complexity still matter? I believe it does, but for different reasons than before.

P.S. @Stevey, I don't think I hit your blog rant length here, but I'll keep trying!